Artists and activists participate in a Campaign to Stop Killer Robots event outside Germany’s parliament, February 2020. © 2020 Human Rights Watch

When I was a child, my parents used to buy me the Eagle comic which I loved. It was full of information, as well as exciting stories. One of its major cartoon strip heroes was the space traveller, Dan Dare, with his sidekick, Digby. Their (and planet Earth’s) most deadly foe was the Mekon, a small green figure with an enormous head who travelled about on a floating platform and who led his people, the green-skinned Treens, to wage war against humankind. Looking back, the Treens were an odd lot.

Aggressive, of course – they were the dreaded enemy after all – but they seemed to be all male, in which case it’s odd that they successfully reproduced, assuming they did. Heartless and unfeeling they certainly were, but they were not machines. The Mekon was a terrifying little figure with an uncomfortable resemblance (apart from his green colour) to the British Prime Minister’s current advisor, Dominic Cummings. Both tend to bark orders at people and refuse to listen to anyone else’s point of view, with an unshakeable belief in their right to be in charge. The Mekon’s aim is always to conquer and take over the universe, an ambition that some in government have said Cummings shares. In one story (there were many), the Mekon had created an army of killer robots that he called Electrobots, described in the text as ‘mechanical monsters’, that only obeyed his voice (surely the dream of many of today’s more autocratic leaders). They were defeated when a young cadet impersonated the Mekon’s voice and instructed them to destroy themselves. Perhaps it’s a technique we should bear in mind, because what are called ‘lethal autonomous weapons’ that take their own decisions about whom to kill are becoming a reality.

You may recall the Arnold Schwarzenegger films about The Terminator, a cyborg killer sent back in time to kill the mother of the human leading the future resistance to robots that have taken over the world. The story revolves around an entity called Skynet, an artificial intelligence that grows to see humans (not unreasonably) as unreliable and remarkably messy, so it instigates a nuclear war to wipe out people and allow it to take total control of the planet Earth. Back in 1984, when Terminator was made, such ideas were purely science fiction. Hopefully, they still are in the main, but each country tries to keep secret its work on artificial intelligence and there is no doubt that research is being carried on into weapons that can take their own decisions on whether or not to kill. Some already exist. In 2017, President Vladimir Putin told students in Moscow of his belief in Artificial Intelligence. “It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.” China has the same belief and has set itself the goal of achieving that dominant rôle by 2030, although nobody knows how far along that road it has progressed. I’ve never understood why anyone thinks they should run the world when most of those who do have already made such a confounded mess of their own countries.

NATO’s Assistant Secretary-General for Emerging Security Challenges, Dr. Antonio Missiroli, writing in a personal capacity, reminded his readers that Putin had already announced the successful testing of Russia’s new ‘hypersonic glide’ vehicle, capable of flying at 27 times the speed of sound and the fastest missile in the world by far, while in September 2019, Houthi rebels from Yemen launched a massive coordinated drone attack on two oil production facilities in Saudi Arabia, successfully evading Saudi air defence systems.

Russian Tsirkon hypersonic missile

AI is also thought to have been involved in deliberate cyber-attacks on medical care facilities during the COVID-19 crisis. In our interconnected world, the use of AI in attacks targeted against supposed enemies is known as ‘net-centric warfare’, and we’re going to have to get used to it. “The 21st century has in fact seen a unique acceleration of technological development,” writes Dr. Missiroli, “thanks essentially to the commercial sector and especially in the digital domain – creating an increasingly dense network of almost real-time connectivity in all areas of social activity that is unprecedented in scale and pace. As a result, new technologies that are readily available, cleverly employed and combined together offer both state and non-state actors a large spectrum of new tools to inflict damage and disruption above and beyond what was imaginable a few decades ago, not only on traditionally superior military forces on the battlefield, but also on civilian populations and critical infrastructure.” Where is Arnold Schwarzenegger when you need him?

The Secretary-General of the United Nations, António Guterres, has expressed concern, not so much about AI itself but about the direction in which the research seems to be going. In a message to the Group of Governmental Experts in March 2019, the UN chief said that “machines with the power and discretion to take lives without human involvement are politically unacceptable, morally repugnant and should be prohibited by international law”. He insisted that no country is actually in favour of ‘fully autonomous’ weapons that can take human life.

Secretary-General of the United Nations António Guterres © UN Photo/Eskinder Debebe

To be perfectly honest, that seems like wishful thinking; some undoubtedly are. He is certainly keen for progress to be made on the control of ‘lethal autonomous weapons systems’, or LAWS. There is an existing agreement, the lengthily-titled Convention on Prohibitions or Restrictions on the Use of Certain Conventional Weapons Which May Be Deemed to Be Excessively Injurious or to Have Indiscriminate Effects, whose acronym would be the CPRUCCWWMBDBEIHIE. If you haven’t heard of it, that title may be the reason why; it’s not even pronounceable. In any case, it dates from 1980 and entered into force in December 1983, before AI was as advanced as it has since become. Even so, it has been signed by 125 state parties, so the various would-be developers of killer robots (or LAWS, if you’d rather) presumably adhere to it, or at least say they do. The acronym LAWS sounds a little euphemistic for what it represents, I think.

OUT-SMARTING A COMPUTER

It was in 1950 that Alan Turing, the English mathematical genius whose pioneering work on cryptanalysis helped break the Nazi naval ciphers, suggested a test for AI. Known as the Turing Test, it involves somebody holding a 3-way conversation with a human and a computer, without being told which is which. The conversation would be in text only, such as through a computer keyboard. If it’s not possible to tell which reply comes from a human being and which from the computer, then that computer has passed the test. It was called ‘the imitation game’ and was designed to answer his question: ‘can machines think?’ Incidentally, Turing also developed an electromechanical code-breaking machine called the bombe, which can still be seen at Bletchley Park in England, where Turing and the other code-breakers were based. It greatly speeded up the analysis of messages encoded on the Nazi Enigma machine. More recently, Google announced its new Assistant, developed with its Duplex technology. Its voice sounds decidedly human and it can even make restaurant or hairdresser appointments, for instance, without the person at the other end ever realising they’re talking to a machine. It does that partly by including the ‘ums’ and ‘ers’ of normal speech – the little errors we all make – just as Turing said it should, all those years ago. But this is a tech giant trying to take over the market, not a government trying to take over the world. It may book you a meal, but it won’t come around and shoot you or blow up your home. Sadly, there are people working on designing AI that can and will do both.

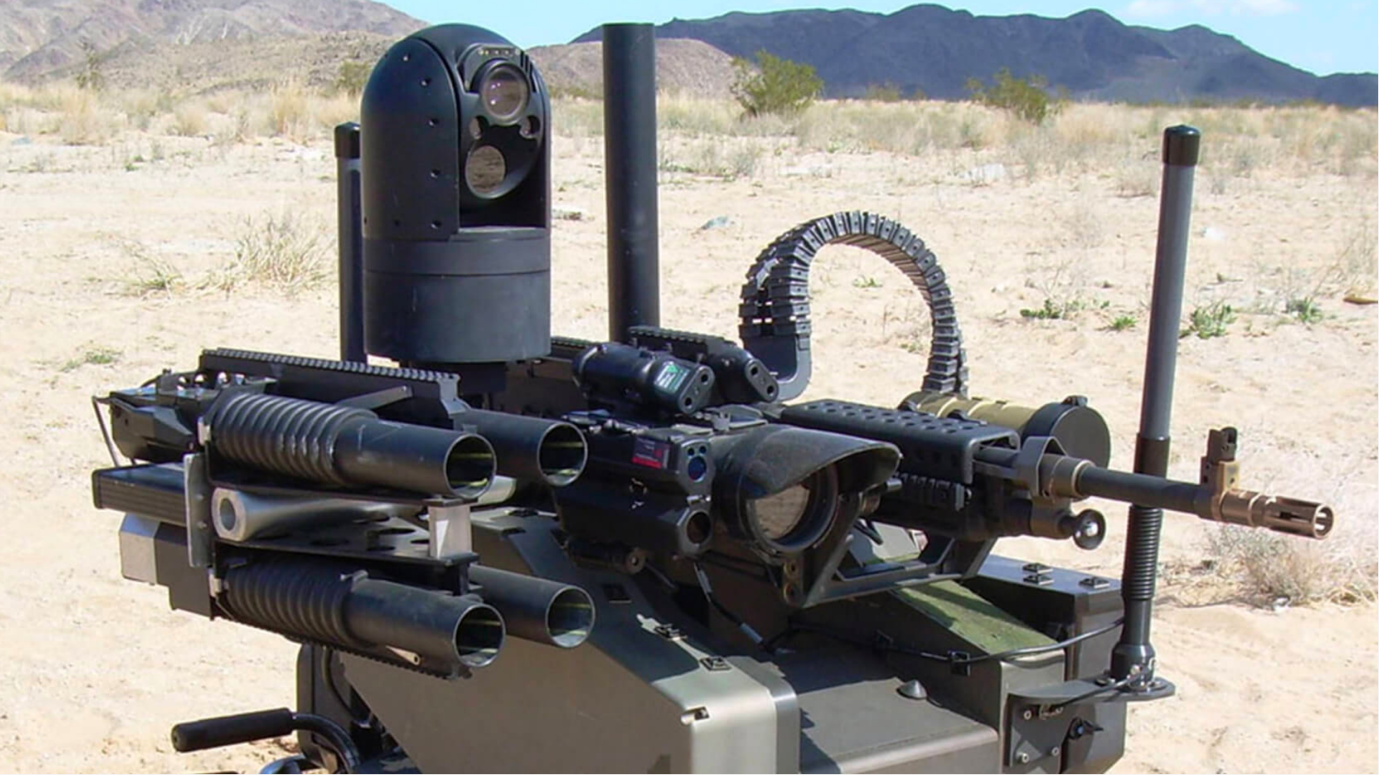

The 9A52-4 Russia’s Killer Robot Rocket Launcher

Writing on the Theatrum Belli website in August 2019, Dylan Rieutord casts doubt, intentionally or otherwise, on a rôle for the human dimension in combat decisions. “If the decision-making loop will still be a reality by 2030, that is to say that Man remains in the loop and at the center of the decision, some such as the United States, Russia, or Israel demonstrate claims to total autonomy for their robot armies.” It’s a terrifying thought: people are inventing the machinery to march (or roll or fly) into your country and kill you without inventing Arnold Schwarzenegger first. Those countries that fear they may get left behind, writes Rieutord, are also playing catch-up. “Ukraine, Estonia, China, Turkey, Iran, Iraq are robotized, trivializing and encouraging this new race to armament plagued by difficulties due to the terrestrial environment for a military application of robotics.” It’s a funny thing, I’ve been on documentary shoots in which a cameraman has used a sophisticated professional drone that can return to its launch point faultlessly in the event of problems.

They’re handy for aerial shots and arguably get over-used in a lot of modern TV drama. But neither I nor the cameraman had any intention of killing people, merely filming them, and with their cooperation. The fact that it’s so easy to do that suggests that replacing the camera with a rocket launcher would be feasible and quite simple. But he or I would have still retained control; the race is now on for weapons without a human input. You wouldn’t want something like that to short-circuit or to misunderstand instructions. Are you sure you want to give verbal instructions to Siri or Alexa? What if they turn against you? I recall an excellent example, quoted in a book called Autocorrect Fail, of a conversation by text, one end of which was being dictated through one of those computer voice assistants, such as Siri or Alexa. 1st speaker: “Are you ready?” Reply: “Just lemon Parkinsons”. 1st speaker again, puzzled: “Hello?” Reply: “Lemon pork knee pie”. 1st speaker, getting frustrated: “Dude, you gotta give up on Siri”. Reply: “Lucky ship. I donut a swan with this phone.” Now, if that had been instructions to an autonomous weapons system, it could have been disastrous. Imagine if the instruction “proceed at measured pace” was understood as “protester: spray with Mace”. Or if an overheard comment such as “I’m afraid I’m going to puke” turned into “Raid the place, then nuke”.

It’s a development that worries the European Council on Foreign Relations. It points out that it’s very hard to define exactly what LAWS are, but basically, says the ECFR, they’re weapons that can be airborne unmanned drones, underwater robots or missile defence systems or even cyber weapons.

A Royal Air Force drone armed with a missile, working autonomously without any human control

Their decisions are governed by AI. The future looks worrying. “Intelligent, fully autonomous, lethal Terminator-type systems do not exist yet,” says the ECFR, “but there are hundreds of research programmes around the world aimed at developing at least partly autonomous weapons. Already in use are military robotics systems with automated parts of their decision cycle.” There are bodies determined to stop the development of autonomous weapons, such as the International Committee for Robot Arms Control (ICRAC) and the Campaign to Stop Killer Robots (CSKR). There are some tentative signs of progress, according to the ECFR, but they’re very tentative, as this commentary from September 2018 makes clear: “Last week’s meeting, the sixth, ended without the breakthrough that activists had hoped for, namely, the move to formal negotiations about a ban. The document issued at the end of the meeting recommended only for non-binding talks to continue.” I suppose we should be glad they’re talking at all, although it’s hard to take much comfort from a lot of top politicians saying “we’ll think about it” while holding guns behind their backs.

TO BAN OR NOT TO BAN?

Russia, China and the United States are opposed to any total ban on LAWS. After all, they’re thought to be busy developing them. The issue remains a problem for Germany, which sees itself as not really a military power and whose public is uncomfortable with the topic, whilst also wanting to be at the forefront of AI research. As the ECFR reports, “Some of the countries that are reluctant to support a ban on LAWS are worried that such a move could impact on their ability to research and develop other types of AI, as well as some military AI uses they may be interested in further down the line.” AI, after all, could be invaluable in bomb disposal and mine clearance. Even the European Union is not in favour of regulations that might restrict research into robotics, when AI has a great many peaceful civilian uses, quite apart from helping an armed robot to decide whether or not to kill someone.

France is opposed to a ban on lethal autonomous weapons and Germany seems keen to try and please everyone by supporting France in wanting a political declaration about them, rather than a full ban. It has drawn criticism, however. They want a compromise based on regulation, while activists believe Europe should be “leading the charge” for a ban, according to the news site, Politico. The Campaign to Stop Killer Robots group, comprised of 65 non-governmental organizations in 28 countries, was furious: “We are disappointed that Germany has decided to work so closely with France to promote measures less than a ban, and less than a legally binding instrument or a legally binding treaty,” said Mary Wareham, the group’s global coordinator. Stopping research into LAWS, though, is probably impossible: as the old book of Arab folk tales, One Thousand and One Nights, makes clear, once the genie gets out of the bottle it’s virtually impossible to get him to go back in.

The Stop Killer Robots Global Campaign Coordinator Mary Wareham © Nobelwomensinitiative.org

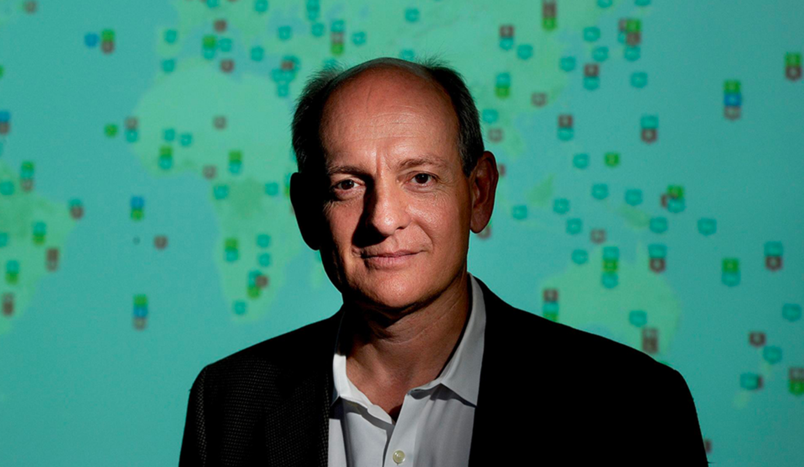

The Campaign to Stop Killer Robots is, as you might expect, unequivocal in its opposition to LAWS. “Fully autonomous weapons would decide who lives and dies, without further human intervention,” says its website, “which crosses a moral threshold. As machines, they would lack the inherently human characteristics such as compassion that are necessary to make complex ethical choices.” Some of the harsh responses to recent protests and demos suggest that not all humans are prepared always to show compassion. In the death of George Floyd, the Minneapolis police showed very little compassion, for instance. But without human intervention, the NGO fears, things will be worse. “The US, China, Israel, South Korea, Russia, and the UK are developing weapons systems with significant autonomy in the critical functions of selecting and attacking targets. If left unchecked the world could enter a destabilizing robotic arms race. Replacing troops with machines could make the decision to go to war easier and shift the burden of conflict even further on to civilians. Fully autonomous weapons would make tragic mistakes with unanticipated consequences that could inflame tensions.” Another NGO, simply called Ban Lethal Autonomous Weapons, has on its website a terrifying short video called Slaughterbots about tiny killer drones that can be released in swarms to kill anyone chosen by those who unleash them. I recommend that you seek it out. In the case illustrated, they attack and kill a group of peacenik students for talking about pacifism. It might class as science fiction, but only just. At the end of the video is a statement from Stuart Russell, Professor of Computer Science at the University of California, Berkeley, who has been working on AI for more than 35 years. “Its potential to benefit humanity is enormous, even in defence. But allowing machines to choose to kill humans will be devastating to our security and freedom,” he said.

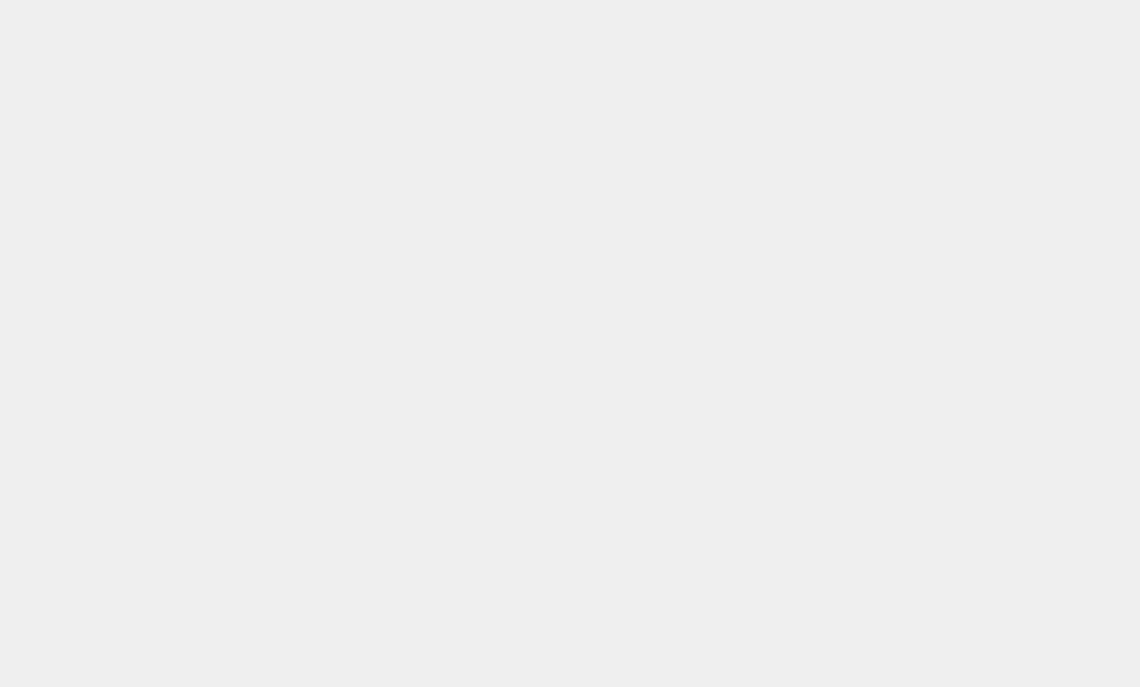

Killer robot Rmt Fellaga

Killer robots have to rely on facial recognition software, widely used by law enforcement agencies in some countries, especially in China but to a lesser extent in the US and UK. Accuracy rates have improved dramatically in recent years, even reaching 99.97% in some tests conducted under ideal conditions. But if a killer drone is flying around, you wouldn’t want to be the 0.03% it mistakenly believes to be its target. It’s pretty impressive, though, as the Center for Strategic and International Studies reports. “However, this degree of accuracy is only possible in ideal conditions,” it admits, “where there is consistency in lighting and positioning, and where the facial features of the subjects are clear and unobscured. In real world deployments, especially, perhaps, in the chaos of a conflict zone, accuracy rates tend to be far lower. For example, the FRVT found that the error rate for one leading algorithm climbed from 0.1% when matching against high-quality mugshots to 9.3% when matching instead to pictures of individuals captured ‘in the wild,’ where the subject may not be looking directly at the camera or may be obscured by objects or shadows.”
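
To put those percentages into perspective, here is a rough back-of-envelope sketch in Python. It assumes, purely as a simplification, that every scan fails independently at the quoted error rate, and the crowd size of 10,000 is a hypothetical figure chosen only for illustration; the real tests measure errors in a more nuanced way than this.

```python
# Error rates quoted above, written as fractions of scans that go wrong.
ERROR_RATES = {
    "ideal conditions (99.97% accurate)": 0.0003,
    "high-quality mugshots": 0.001,
    "'in the wild' images": 0.093,
}


def expected_errors(people_scanned: int, error_rate: float) -> float:
    """Average number of wrong results if each scan fails independently."""
    return people_scanned * error_rate


def chance_of_at_least_one_error(people_scanned: int, error_rate: float) -> float:
    """Probability that at least one scan out of the whole crowd goes wrong."""
    return 1.0 - (1.0 - error_rate) ** people_scanned


if __name__ == "__main__":
    crowd = 10_000  # hypothetical crowd size, an assumption for illustration only
    for label, rate in ERROR_RATES.items():
        errors = expected_errors(crowd, rate)
        chance = chance_of_at_least_one_error(crowd, rate)
        print(f"{label}: about {errors:.0f} errors, "
              f"chance of at least one = {chance:.3f}")
```

Under those assumptions, even the best-case rate leaves a handful of people wrongly flagged in a crowd of that size, and the ‘in the wild’ rate leaves hundreds.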

Tests have also shown that the technology can be less reliable with some groups of people. This is especially worrying where driverless vehicles are concerned. They rely on AI and various sensors to drive safely but they still need good weather and calm streets to be safe. The American Civil Liberties Union (ACLU) tested face recognition software on all the members of the US Congress, matching them against a set of mugshots from a database. Twenty-eight of the Congressmen and women were misidentified as being from the database. And that, of course, is using clear and properly-taken photographs. The Project on Government Oversight, POGO, warns that “Numerous studies – including those by MIT, an FBI technology expert and the ACLU – have also found that facial recognition is significantly less accurate when identifying people of color and women. So long as these higher misidentification rates continue, facial-recognition surveillance will constitute not just a threat to the liberty and life of innocent people, but also a serious civil rights concern because it could create de-facto algorithm-based racial profiling.” You may well wonder if this is the sort of system that should be helping to pick targets for an autonomous weapon. Of course, Google, Apple, Facebook, Amazon and Microsoft are all working on facial recognition software, but Google has dropped out of working on it as part of defence technology because of errors and problems with identifying women and people of colour.

An MQ-1B Predator, left, and an MQ-9 Reaper

A squadron ground control station maintainer powers a ground data terminal used to transmit signals needed to fly both the MQ-1B Predator and MQ-9 Reaper © US Air Force

Google employees reacted strongly to the company’s involvement in Project Maven, a US Department of Defence project to use AI-controlled drones for surveillance. As a result, the company decided not to renew its contract when it expired in March 2019. Many employees are against weapons research and Google has since dropped out of the bidding for the Pentagon’s Joint Enterprise Defence Infrastructure contract, which is worth $10-billion (€8.5-billion) over ten years (ironically, the acronym is JEDI; George Lucas has a lot to answer for). The other tech giants are said to be still in the running.

MRK-27 BT with RShG-2 and RPO Shmel is a vehicle intended for bomb disposal equipped with a 7.62mm machine gun, RShG-2 rocket-propelled assault grenades and the thermobaric weapon RPO-A SHMEL © Vitaly V. Kuzmin

A scholarly report published by the Institute of Electrical and Electronics Engineers (IEEE) sets out the issues: “Sources of errors in automated face recognition algorithms are generally attributed to the well-studied variations in pose, illumination, and expression, collectively known as PIE. Other factors such as image quality (e.g., resolution, compression, blur), time lapse (facial aging), and occlusion also contribute to face recognition errors. Previous studies have also shown within a specific demographic group (e.g., race/ethnicity, gender, age) that certain cohorts are more susceptible to errors in the face matching process.” If it’s done in aid of crowd control, finding a missing person or arresting a criminal then errors can be checked and corrected. If the mistakes are made by a killer drone (or other autonomous weapon) that option doesn’t exist. It’s no good apologising to a corpse.

BRAVELY GOING

In March 2020, the National Science Foundation issued an invitation for proposals for investigation and research into robotics. The NSF gives a rather nifty definition of what they’re after: “For the purposes of this program, a robot is defined as intelligence embodied in an engineered construct, with the ability to process information, sense, and move within or substantially alter its working environment.” It goes on to explain what it’s hoping to achieve, more or less: “The goal of the Robotics program is to erase artificial disciplinary boundaries and provide a single home for foundational research in robotics. Robotics is a deeply interdisciplinary field, and proposals are encouraged across the full range of fundamental engineering and computer science research challenges arising in robotics. All proposals should convincingly explain how a successful outcome will enable transformative new robot functionality or substantially enhance existing robot functionality.”

Stuart Russell, Professor of Computer Science at the University of California, Berkeley © berkeley.edu

Of course, autonomous and semi-autonomous robots have a range of invaluable uses. Take, for example, the Curiosity Rover, still creeping over the surface of Mars at 30 metres an hour – long after it was expected to have stopped working – and carrying out a variety of tests. Messages from Earth take between 4 and 24 minutes to arrive, depending on the planets’ relative positions, so Curiosity has to be able to make some of its own decisions about the route, as it has done since landing in 2012, following its predecessors Spirit and Opportunity. Soon it will be joined by the most advanced robot explorer yet, Perseverance, which, among its other tasks, will be drilling core samples which it will leave on the surface for a planned future mission to collect and bring back to Earth. Robots are far better designed to explore alien worlds than we fragile humans will ever be. Also, they can take months-long journeys to other planets without the need for water, food, sleep or sanitary provisions. That makes for much lighter spaceships with more capacity for carrying fuel and equipment; water is heavy, and robots don’t need to go to the toilet.

Russian Platform-M combat robot © Novosti

The danger comes when AI is left to its own devices with total autonomy in which it applies blind logic. Professor Russell cites the example of what’s called the ‘King Midas problem’. Midas wanted everything he touched to turn to gold, according to legend, and that’s what happened to his food, his drink, his family and so on. He died of starvation. Russell suggests as an example of the dangers that you ask AI to find a cure for cancer ‘as quickly as possible’. The AI chooses the shortest route, by infecting every human on earth with cancer and then trying out a wide range of treatments until it finds one that works. That’s not what you asked for but it’s how unfeeling AI may interpret your instructions.

All these concerns, of course, do little to deter those countries determined to follow the route to autonomous weapons which can engage the enemy without putting your own soldiers at risk. So what is out there at present? Well, the US has the Anti-Submarine Warfare Continuous Trail Unmanned Vessel (ACTUV), Sea Hunter, a catamaran designed to hunt and destroy submarines. Some have said it looks a little like a Klingon Bird of Prey, except without the Klingons, of course. It is unmanned, or ‘un-Klingoned’, if you prefer. The US Navy has also tested the autonomous X-47B aircraft, which needs no pilot.

Sea Hunter Autonomous Vessel © U.S. Navy photo by John F. Williams

The Arms Control Association also says that “The (US) Army is testing an unarmed robotic ground vehicle, the Squad Multipurpose Equipment Transport (SMET) and has undertaken development of a Robotic Combat Vehicle (RCV). These systems, once fielded, would accompany ground troops and crewed vehicles in combat, trying to reduce U.S. soldiers’ exposure to enemy fire.” Needless to say, Russia, China and a number of other countries are doing much the same. The Arms Control Association (ACA) is concerned that dehumanising the battlefield risks breaching the international conventions on warfare and needlessly killing civilians while also causing escalation. “For example, would the Army’s proposed RCV be able to distinguish between enemy combatants and civilian bystanders in a crowded urban battle space, as required by international law? Might a wolfpack of sub hunters, hot on the trail of an enemy submarine carrying nuclear-armed ballistic missiles, provoke the captain of that vessel to launch its weapons to avoid losing them to a presumptive U.S. pre-emptive strike?” If the AI we have in everyday use was as fool-proof as its manufacturers claim, everyone would be able to operate the TV remote, not just the teenagers in the house.

China’s new killer robot ship, JARI, is designed for remote-control or autonomous operation © Weibo

DON’T MENTION THE WAR-MONGERING

When the UN tried to establish a mandate for debating the Convention on Certain Conventional Weapons, it was blocked by the US, Israel, Russia and Australia, much to the concern of UN secretary-general, António Guterres. “The prospect of weapons that can select and attack a target on their own raises multiple alarms – and could trigger new arms races,” he warned. “Diminished oversight of weapons has implications for our efforts to contain threats, to prevent escalation and to adhere to international humanitarian and human rights law. Let’s call it as it is. The prospect of machines with the discretion and power to take human life is morally repugnant.” A proposal for two weeks of discussion on the issue was vetoed by Russia. “They insisted that one week was more than enough,” reports the Arms Control Association. “Their case was that ‘our delegation cannot agree with the alarmist assessments predicting that fully autonomous weapons systems will inevitably emerge in the coming years.’ And they have repeatedly said the whole discussion is a waste of time and money because no one is actually developing these weapons.”

The Russian stealth robot ‘suicide’ tank Nerekhta

Which makes one wonder why they were showing them off at an arms fair near Moscow, and why President Vladimir Putin and his people have been boasting that autonomous weapons will dominate the battlefields of tomorrow, with Russia (naturally) leading the way. According to the Forbes website: “Sputnik News reported that the Russian weapons maker, Degtyarev, has developed a stealth robot ‘suicide’ tank, the Nerekhta. Once launched it can navigate autonomously to a target in silent mode and then explode with a powerful force to destroy other tanks or entire buildings.” Russia Today quoted President Vladimir Putin: “Whoever becomes the leader in this sphere will become the ruler of the world.” Similar comments have been made by deputy prime minister Dmitry Rogozin and Defense Minister Sergei Shoygu, says Forbes. Viktor Bondarev, chairman of the Federation Council’s Defense and Security Committee, stated that Russia is pursuing “swarm” technology, which would allow a network of drones to operate as a single unit. “Flying robots will be able to act in a formation rather than separately,” he boasted. Whoopee! That’s good news for the rest of us, isn’t it?

President Vladimir Putin and Commander-in-Chief of the Aerospace Forces Colonel General Viktor Bondarev watching demonstration flights by the Russian Air Force © kremlin.ru

And just in case you were wondering, the 6th International Military-Technical Forum ARMY-2020, being held by the Russian Ministry of Defence, is going ahead as planned. As the on-line advertising says, “The International Military-Technical Forum ARMY-2020, which is to be held in August 2020, is going to encompass an exhibition, scientific and business and demonstration programs. Representatives of the Nuclear, Biological and Chemical Protection Troops are going to take part in all planned events.” Meanwhile China has come up with a tiny but deadly warship that is entirely robotic to counter US advances in naval strength, as reported in The National Interest. “The JARI unmanned surface vessel is equipped with a phased array radar, vertical-launched missiles and torpedoes despite its small size of 15 meters [49.2 feet] and low displacement of 20 tons,” said China’s state-owned Global Times, which cited an earlier Chinese state television report. “These weapons are usually only seen on frigates and destroyers with displacement of thousands of tons, and their use on a ship as small as the JARI makes the vessel the most integrated naval drone in the world.” And so it goes on: smaller and smaller, deadlier and deadlier. And less and less inclined to discuss it. It will take more than a cadet who’s good at voice impersonations to save the world.

T. Kingsley Brooks

Click here to read the 2020 August edition of Europe Diplomatic Magazine