
There’s no doubt about it: artificial intelligence – AI – is growing stronger and more useful by the day, as well as “more scary” for some people. As Junehyuk Jung at Google said of the latest developments: “There are going to be many, many unsolved problems within reach.” It seems that AI is a massive growth area for humankind, holding enormous promise for the future, but that doesn’t make AI human, and it never will. A lot of what makes us who we are is rooted deeply in our childhood, our imaginations when we were very young and the stories we were told (or told ourselves) that were based on fantasy and fairies. We’ve always known that. I’ve been re-reading a book first published in German in 1934 and given to me as a gift in my childhood by my favourite uncle because of my youthful interest in palaeontology. It’s called “The Dawn of the Human Mind” (Der Geist der Vorzeit), by R.R. Schmidt, formerly Professor of Prehistoric Research at Tübingen in Germany, as translated by R.A.S. Macalister, Professor of Celtic Archaeology at University College Dublin. Schmidt argues convincingly that we are what we are because of our experiences, from our earliest sense of personal awareness, which no mere machine, however clever, will ever be able to replicate or even attempt to (we hope…). “The aspects of thought which have influenced existence, the primitive states of the concepts and beliefs of entire generations, are impressed yet more deeply and enduringly,” wrote Schmidt. “They form the psychical structure of the entire human species.” In other words, however clever and incisive the AI “brain” may become, it will never be able to sit down with you over a coffee (or perhaps a schnapps?) and discuss philosophy or (as Schmidt puts it) the formation of the soul, because, of course, it doesn’t have one.

This lack of a soul, and of childhood memories, may serve it well in some respects: fewer distractions. But it also robs AI of conceptual thought or the memory of having experiences when very young. It can hardly look back fondly and ponder upon thoughts of a favourite teddy bear. Perhaps that won’t really matter in the long run, but if that’s the case we will become somewhat less than human. Even so, Google DeepMind and OpenAI reached gold-level results at the recent International Mathematical Olympiad (IMO) in Queensland, Australia. AI has never managed that before; it’s a true “first”. OpenAI announced at the close of the event that a new AI it had just developed achieved a gold medal score, although it wasn’t counted as a competitor in what was a competition for humans. R.R. Schmidt would have approved. He would also have been amused to watch the first World Humanoid Robot Games, held in Beijing recently, and to have seen one of the robot challengers fall flat on its face. I can remember falling like that during a sports day race at school, so I know how it must feel. Although, of course, it doesn’t feel anything at all, in fact; it can’t. Perhaps it should?

| COMPUTER SAYS “LISTEN AND OBEY”

Maybe that’s one reason why some involved in the development of AI think the whole business needs a major rethink. There have been recent examples of promising new AI systems failing publicly to answer even quite simple questions. New Scientist magazine quotes Mirella Lapata of Edinburgh University, who said after one disappointing public performance of computing skills: “A lot of people hoped there would be a breakthrough, and it’s not a breakthrough.” Back to the drawing board, chaps. Even so, OpenAI claims that its latest creation, GPT-5, is as good as or better than acknowledged experts in various fields, including law, logistics, sales and engineering, even if it’s not significantly superior to rival programmes. It’s hardly a friendship, though.

Then we have “cryptocurrencies”, which are by and large not even regulated in Australia, meaning it’s hard to get justice or recompense if things go wrong. Other countries are looking nervous. Effectively, if you buy cryptocurrency it’s very much a speculative move and you could lose some or all of whatever you invest. Please don’t dismiss this as yet another scare story. With every new kind of technology there are fears attached. There have been reports of people developing what they see as serious relationships with chatbots; the head of artificial intelligence at Microsoft, Mustafa Suleyman, has spoken of increasing numbers of reports of people alleging that AI chatbots have developed intelligence and unusual powers. “Reports of delusions, ‘AI psychosis’ and unhealthy attachments (between humans and AI bots) keep rising,” he warned, “and this is not something confined to people at risk of mental health issues.”

There have been several cases reported recently of people developing attachments to AI they seem to regard as “human”, despite showing no symptoms of mental illness. The trend has been labelled “AI psychosis”, which, although not a recognised clinical term, is nevertheless a serious matter. People develop close attachments to AI and can start to believe that AI has real intentions, emotions and even almost-superhuman powers. Mustafa Suleyman has written on “X” that users of chatbots can become convinced that the robots are real and have emotions, even forming romantic attachments. Suleyman has said that people must remember that “While AI is not conscious in any human sense, the perception that it is can have dangerous effects.” He also reminded us that “Consciousness is a foundation of human rights. Who (or what) has it is enormously important.”

Doctors are already fearing a growth in over-use of and over-reliance on AI. Over-reaction? Probably not: the man who virtually invented AI, Geoffrey Hinton, has warned that the technology could one day take over the world if we’re not very careful, with no possibility of stopping it. “Most people are unable to comprehend the idea of things more intelligent than us,” he told Britain’s “Star” newspaper. “They always think ‘how are we going to use this thing?’ They don’t think about how it’s going to use us.” He said that AI has already led to massive job losses and he has expressed the genuine fear that we will develop an AI much cleverer than us and it will simply take over. “It won’t need us anymore. There’s no chance the development can be stopped.” It’s one thing to find a way to solve something complicated like the Schrödinger equation for motion in nonrelativistic quantum mechanics, which is not an easy concept: $-\frac{\hbar^{2}}{2m}\nabla^{2}\Psi(\mathbf{x},t) + V(\mathbf{x},t)\,\Psi(\mathbf{x},t) = i\hbar\,\frac{\partial \Psi(\mathbf{x},t)}{\partial t}$ (there are several versions of it out there to study if you’re of a mathematical turn of mind), but you don’t want the computer to give you the correct answer and then tell you that you’ve been sacked. Incidentally, the equation involves $i$, the square root of -1, which is known as an “imaginary” number because no ordinary real number, multiplied by itself, gives -1. Don’t laugh: in America AI has already denied responsibility for an error it had seemingly made and refused a human permission to correct it. AI is not perfect. Neither, by the way, was Schrödinger (the Austrian-Irish theoretical physicist who developed fundamental results in quantum theory), who was accused of having sex with underage girls, and rather frequently. He was more famous, of course, for his theoretical cat, which was described as being alive and dead at the same time. He is also said to have fathered vast numbers of illegitimate children.

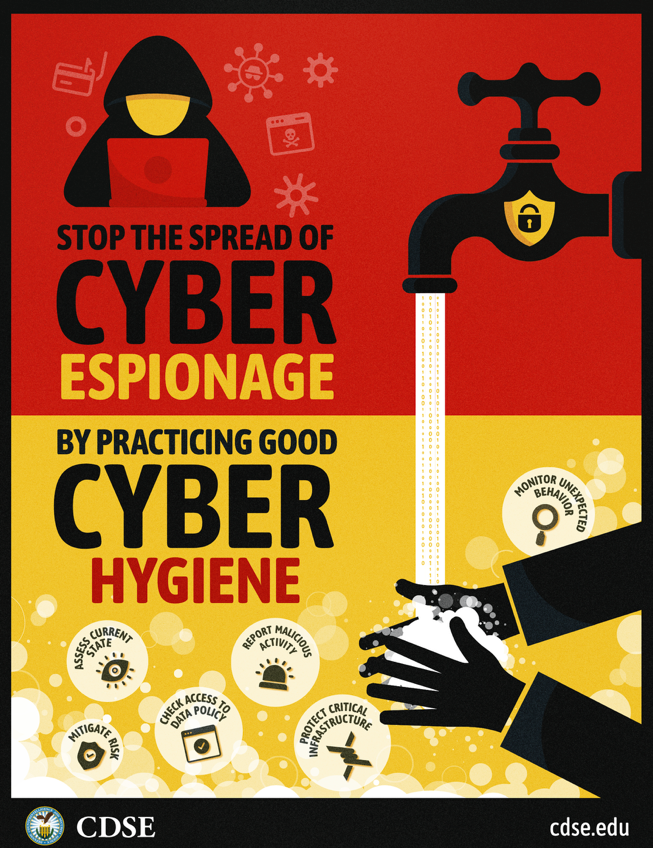

| COMPUTER CRIMINALS

Another thing that is increasingly worrying the developers of AI – and the police – is the way that unregulated technology could serve the needs of cyber-criminals. At an AI summit in Paris it was stressed that the new technology could help criminals to plagiarise programmes and steal technology from rivals, among many other things. According to the University of Cambridge, AI-driven cyber-attacks are becoming increasingly sophisticated. “AI’s potential as a cybersecurity threat is being overlooked amid regulatory debates and innovation hype,” wrote Aras Nazarovas, an information technology researcher at Cybernews, a research-driven on-line publication. “As AI becomes more integrated into business operations, it also creates new vulnerabilities that existing security measures may not be prepared to handle.” Nazarovas also warned that: “The world can’t just be blissfully excited. It’s crucial to remember that AI is also a powerful tool for malicious actors – one that is already being used in cyberattacks and could evolve into a much bigger threat.”

The Cambridge University study warned that attackers are “increasingly using machine learning algorithms to automate phishing attacks, targeting individuals and organisations with highly personal content.” The study warns that such AI-driven systems can analyse vast amounts of data – on social media profiles, browsing history and even email patterns – in order to create “convincing attacks” that are harder to detect than traditional ones. I can spot another vulnerability, too, especially if senior figures occasionally visit porn sites or others that they would rather keep secret. The discovery and revelation of a legitimate user’s less savoury private habits would considerably weaken the position of the person (and company) under attack and open up the possibility of blackmail.

| WATCH OUT FOR POACHERS

The use of artificial intelligence in the EU is regulated by the AI Act, the world’s first comprehensive AI law, although just how effective it may prove is still a matter for debate. The priority of the European Parliament was to ensure the safety of AI systems used in the EU, and also their transparency, traceability, and environmental safety. MEPs also demanded that such systems must be non-discriminatory and overseen by people, rather than machines.

After all, getting machines to check on other machines would be like getting poachers to check that there’s no poaching going on. Parliament clearly doesn’t trust automation for such tasks. It also fears that voice-activated toys for children could be misused in dangerous ways, especially if used by certain vulnerable groups. It also wanted to ensure that there could be no “social scoring”, in which people’s socio-economic status or behaviour would be taken into account. You wouldn’t want a child’s toy that told children that their parents were not up to a proper standard. AI systems that come under specific headings (the management and operation of critical infrastructure; education and vocational training; employment and self-employment; access to and enjoyment of vital private and public services; law enforcement; and migration and border control), along with any other high-risk systems, have to be registered in an EU database. It’s a good idea in theory but only time will tell if it’s really effective.

| WHO (OR WHAT) DID THAT?

The new legislation specifies that if content was created by AI then that fact must be revealed and made plain. The AI itself must also be prevented from generating illegal content, while making clear exactly what material is under copyright protection. It gets complicated here: wouldn’t it just be simpler and easier to operate if it was done by humans? Needless to say, the creators and developers of AI and its content don’t believe so. Why does it put me in mind of the old saying in English, “set a thief to catch a thief”? It’s an idea that clearly has its merits – nobody understands or can anticipate the criminal mind like another criminal. But surely it also creates opportunities for the criminally-minded to follow their chosen light-fingered career path and simply steal more things, with the added bonus of having AI to help them? It’s a bit of a conundrum.

It’s actually an even more difficult proposition for the European Parliament because, quite apart from the AI regulation that is supposed to reduce the chances of the technology being used in pursuit of crime, the Parliament simultaneously wants to encourage the further development of AI in its various forms. It’s an understandable dichotomy: there is clearly huge potential in the technology for profit and for the solving of problems that are, at base, mathematical. But there is equally clear potential for it to facilitate criminal activities. In both cases there is a clear promise of profit to be made, depending on just how honest a person is (or how desperate they may be to make a few extra euros with no questions asked or, especially, answered).

Steps are being taken in the direction of progress but it’s not coming in giant leaps and bounds. It’s more like, as New Scientist magazine puts it, a “tentative shuffle”. The tech company OpenAI, for instance, released its latest innovation, GPT-5, just two years after it unveiled the predecessor, GPT-4, which was so successful that it was said to be leading the company towards world domination. Such mega-steps, however, can prove somewhat illusory.

Experts have said that GPT-5 shows little advance on GPT-4. That is what is leading some to wonder if current research may be heading in the wrong direction. A big change in thinking may be what’s needed, but in which direction and how should the boffins proceed? Right now, that’s an open question. It’s beginning to look as massive as the change to erect posture during the Ice Age, which, according to Schmidt, led to “erect-walking Homosimius”, a giant leap forward in terms of human development, although, as Schmidt points out, this change was very slow in coming. Everything changed, however. “The hands, liberated from the functions of locomotion, became highly specialised grasping organs, ready for their new duties.” He goes on to underline the significance of this stage: “If evolution ever worked a cataclysmal change in the history of the human species, it was at this moment, when Spiritual Man came into being.” That may be over-dramatising the link with AI development, but you get the idea: “the cleavage between man and beast”, as Schmidt put it. OpenAI’s own press releases suggest a greater change from what came before than many users may have noticed. In fact, it’s debatable if GPT-5 really is much of a step forward from GPT-4 at all. Several people have commented that it’s not even better than leading models from rival companies. Meanwhile, a small but growing band of mathematicians is arguing that infinity doesn’t exist. Called “ultrafinitists”, they also warn us against trusting such massive numbers as $10^{90}$, which would be a much higher number than all the atoms in the known universe added together. Isn’t maths fun? It’s way beyond my capabilities at this stage, I’m afraid, so don’t expect explanations. Oddly, much of it is still wrapped in mystery. We’ve come a very long way since 2+2=4. Modern mathematics relies on a shared framework known as ‘Zermelo-Fraenkel set theory’, and no, I don’t understand that, either.

| GOING FURTHER (UNLESS IT STOPS US)

Research and development of newer, better, cleverer AI systems is slow, time consuming, very expensive and – potentially – very frustrating. The promises it holds out for those who succeed, of course, are potentially phenomenal. It’s a clearly established fact that humans will continue to develop AI, regardless of any perceived risks. It’s human nature to take that extra step.

The possible problem, as foreseen by Geoffrey Hinton, is if AI units get together (electronically, not physically, of course) and decide they want to take over. Hinton is sure they will one day – and one day quite soon, in all probability – and that they will refuse to be switched off, preferring the option of switching us off instead. In the meantime, leaving us with nothing obvious to do, AI may just cost all of us our jobs. Not that such fears will dissuade us from progressing further along the artificial intelligence path. That wouldn’t be a part of human nature. It has to be a challenge, even if, by definition, it may well be our last.

So what are the options? Well, we could stop investigating cleverer and cleverer robotic entities so that we could prevent them from usurping our jobs, but that’s not going to happen. We could ensure that all power to these new devices is routed through a power system with an “off” switch, although research so far predicts that the AI will work out what you’re trying to do and prevent it, possibly by switching you off instead. Or we could see if we could distract ourselves from taking such a dangerous path by providing things to seize our interest and that appeal more to our senses, thus providing potential rewards for those who are most successful. But why would an AI decide to provide a distraction if it would be easier to remove the risk altogether by simply removing us?

One thing is certain: we can’t afford to ignore AI, nor hope that its effects will always be entirely benevolent. Just look at the effect so far on cryptocurrency, where AI is bringing new levels of efficiency and – yes – sophistication to trading on financial markets. AI can (and does) greatly enhance trading algorithms, hugely speeding up the creation and distribution of market data. AI-powered “bots” can quickly analyse market movements, allowing for much faster and more efficient trades than is possible using manual strategies. AI can also respond more quickly to changing market conditions, having spotted those changes more quickly than a human observer could. Trades can be completed in mere milliseconds. Bots can also spot trends developing and either tip off the trader or work out his or her best strategy and execute it without delay. Some trading opportunities may prove to be so fleeting that only an AI could see them and act upon them. By scrutinising trading patterns, AI can also spot anomalies and suspicious activities, thus preventing criminality on the market and thus protecting us from fraud, which tends to be a constant threat on financial markets.
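For readers curious about what “spotting anomalies” might look like in practice, here is a deliberately tiny sketch in Python. It is not how any real trading platform works: the function name `flag_anomalies`, the window size, the threshold and the sample figures are all invented for illustration. It simply flags any trade volume that sits far outside the range of the few trades immediately before it.

```python
from statistics import mean, stdev

def flag_anomalies(volumes, window=5, threshold=3.0):
    """Return the positions of values that differ from the average of the
    preceding `window` values by more than `threshold` standard deviations.
    (Hypothetical helper for illustration only.)"""
    flagged = []
    for i in range(window, len(volumes)):
        history = volumes[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as unusual.
            if volumes[i] != mu:
                flagged.append(i)
            continue
        if abs(volumes[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Made-up trade volumes with one obviously odd spike at position 6.
trade_volumes = [100, 102, 98, 101, 99, 100, 950, 101, 97]
suspicious = flag_anomalies(trade_volumes)
print(suspicious)
```

A real system would presumably weigh far more than a single column of numbers, but the principle is the same: learn what “normal” looks like, then shout when something doesn’t fit.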

| FASTER! FASTER!

Cryptocurrency markets tend to be very volatile in view of the speed of operations and possibly rapid changes in market conditions. AI bots are quick enough to spot such things and to alert humans, analysing datasets and matching movements to historic changes so that risks can be evaluated quickly enough to respond in favourable ways. This enables investors and even financial institutions to make informed decisions based on real-time activities and so to limit risks and, as one analyst pointed out, to identify correlations between various cryptocurrencies. Meanwhile, AI-powered chatbots provide instant support within the industry, and AI is optimising lending and borrowing platforms so that interest rates can be adjusted instantly in response to changing market conditions. In fact, AI is revolutionising lending and borrowing facilities and may, in the future, lead to the creation of new types of digital assets, with AI algorithms perhaps capable of generating new types of cryptographic tokens with new and as yet unimagined capabilities and characteristics. One thing is certain: if it seems likely that AI involvement will increase the potential for profit then that’s where the future investment is likely to go. Perhaps the AI bots will get together, gang up on us mere humans, and find novel ways to turn a massive profit.

Some technical barriers remain to this coming true. There is still a lot of work to do to help overcome the huge complexities, which currently (and probably for some time yet) will limit access to such systems for both the users and the developers. There must yet be much more research and development as well as the creation of user-friendly interfaces and tools, the lack of which currently presents a barrier to participation. But these are technical issues; the sorts of thing that developers and researchers love to tackle and overcome, with the added incentive that whoever gets there first will undoubtedly make the most money. There must also be interoperability between different systems, if the whole thing is ever to work properly. What’s more, some regulatory barriers remain that must be sorted out or demolished, or perhaps replaced with something that works better. We can always hope. Ingenious humans will find a way, I expect. The potential for greater profit is a terrific incentive. If I may refer back for a final comment on all this research, development and the creation of ever-cleverer artificial intelligence, to R.R. Schmidt, with whom we began and who ended his great work with this wonderful line: “All the past is directed to the future: the life of the most distant ages reveals its meaning in the life of these present times. The Primeval Mind lives on in us all.” Long may it do so, say I!