Artificial intelligence, commonly referred to as AI, has become part of our everyday lives and is changing the way we live, work and interact with technology. But how did it originate, and who were the masterminds behind this ground-breaking technology?

The idea of artificial intelligence has roots that go back to antiquity, when great thinkers and inventors pondered whether it would be possible to construct machines that could mimic human cognition. Despite these early considerations, it wasn’t until the mid-20th century that significant progress was made in the field of AI.

The term “artificial intelligence” was coined by John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon in their proposal for a summer workshop and extended brainstorming session that took place at Dartmouth College, New Hampshire, in 1956. This event marked the beginning of artificial intelligence as an independent academic discipline and brought together prominent scientists and mathematicians to explore the possibilities and hurdles of developing intelligent machines.

One of the early pioneers of AI was the British mathematician and computer scientist Alan Turing. Turing is known for his “Turing Test”, with which he proposed a method for determining whether a machine can exhibit intelligent behaviour that corresponds to that of a human being. His contributions formed the basis for later AI research.

In the years that followed, AI research made remarkable progress, characterised by the development of expert systems, machine learning algorithms and neural networks. These discoveries opened the door to the use of AI in various fields, including speech recognition, image processing and natural language processing.

Even if there is no clear answer to the question of who exactly invented artificial intelligence, the collective efforts of numerous scientists, mathematicians and visionaries have undeniably brought the field to its current form. From the thinkers of antiquity to the researchers of today, the quest to understand and emulate human intelligence remains a strong driving force behind the progress of artificial intelligence.

| EMERGING THREATS IN A TECHNOLOGICAL UTOPIA

The 21st century brought with it a revival of interest and investment in artificial intelligence, fuelled by significant advances in computing capabilities and the accessibility of vast datasets. This era, often referred to as the “AI renaissance”, has seen extraordinary achievements in various AI applications, including machine learning, natural language processing and computer vision.

The progress being made today in the field of artificial intelligence is remarkable. AI has become a multibillion-dollar industry, attracting significant investment in research and development from technology giants such as Google, IBM and Microsoft. This has led to major advances in areas such as autonomous vehicles, virtual assistants and medical imaging, as well as other innovative technologies that were once considered science fiction.

The field of artificial intelligence is being driven by a diverse group of individuals, including researchers, engineers, entrepreneurs and thought leaders. Some of the most influential people in AI include CEOs and founders of major technology companies, leading scientists and innovators who are helping to shape the future of AI. They contribute through their research, by building AI models and algorithms, and by applying AI in various industries.

Generative AI, which has experienced a spectacular boom in recent years, refers to a type of artificial intelligence that is able to create new content such as images, text, music or videos that resemble content created by humans. It uses machine learning algorithms, in particular generative models, to generate new content based on patterns and examples learnt from training data.

Computers with generative AI programmes such as DALL-E 2 and ChatGPT from OpenAI, the Stable Diffusion programme from Stability AI and the Midjourney programme are capable of generating content based on text requests from users. These programmes are trained by exposing them to a huge amount of existing works such as essays, images, paintings and other artworks.

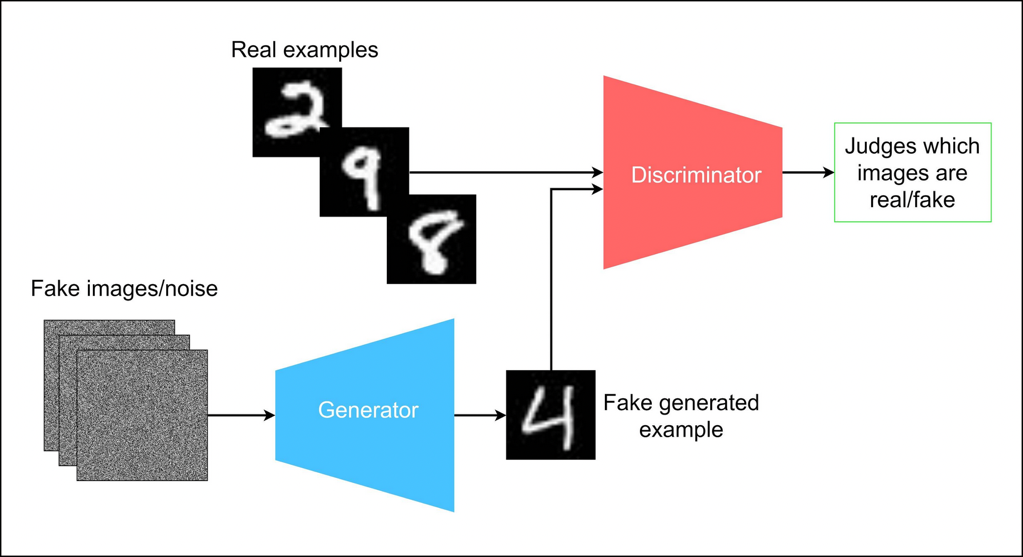

A popular type of generative model is the Generative Adversarial Network (GAN). GANs consist of two main components: a generator and a discriminator. The generator’s task is to generate new content, while the discriminator’s task is to evaluate the generated content and distinguish it from real content. The generator and the discriminator play a game in which they try to outperform each other, with the generator continuously improving its performance based on feedback from the discriminator.
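The generator-versus-discriminator game described above can be sketched in a few lines of plain Python. This is only a toy illustration under stated assumptions, not how production image or text models are built: the "real" data is a hypothetical stream of numbers drawn from a normal distribution with mean 4, the generator is the one-line function g(z) = a*z + b, and the discriminator is a single logistic unit D(x) = sigmoid(w*x + c). All names and parameter values are invented for the example, and training such a simple pair may oscillate rather than settle.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 500, lr: float = 0.02, seed: int = 0):
    """Train a one-dimensional toy GAN and return its parameters.

    Assumed setup (hypothetical): real samples come from a normal
    distribution with mean 4 and standard deviation 1; the generator is
    g(z) = a*z + b and the discriminator is D(x) = sigmoid(w*x + c).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)  # one real sample
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b            # one generated sample

        # Discriminator step: gradient ascent on
        # log D(x_real) + log(1 - D(x_fake)).
        s_real = sigmoid(w * x_real + c)
        s_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - s_real) * x_real - s_fake * x_fake)
        c += lr * ((1.0 - s_real) - s_fake)

        # Generator step: gradient ascent on log D(x_fake), using the
        # updated discriminator's verdict as feedback.
        s_fake = sigmoid(w * x_fake + c)
        a += lr * (1.0 - s_fake) * w * z
        b += lr * (1.0 - s_fake) * w

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator:     g(z) = {a:.2f}*z + {b:.2f}")
    print(f"discriminator: D(x) = sigmoid({w:.2f}*x + {c:.2f})")
```

Each loop iteration plays one round of the game: the discriminator first adjusts itself to tell the real sample from the generated one, and the generator then shifts its output in the direction the discriminator currently rates as more "real".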

However, the question naturally arises as to whether the output of generative AI can be protected by copyright at all, given that most legal systems presuppose human creativity. Proponents of protecting generative AI material argue that significant human involvement in crafting the requests or input justifies entitlement to copyright protection.

Generative AI systems work by recognising and replicating patterns in data, as most machine learning programmes do. However, the data they rely on for training is predominantly human-generated and in many cases subject to copyright protection, which can lead to output that infringes copyright. These are the four main reasons why the results of generative AI lead to legal difficulties:

- Ownership of generated content: when a generative AI system generates content such as images or text, it can be difficult to determine who owns the rights to that content. Traditionally, intellectual property rights are assigned to human creators, but in the case of generative AI, the lines can become blurred. Different countries may have different laws and regulations regarding the ownership of AI-generated content, leading to legal uncertainties.

- Copyright infringement: generative AI models are trained on large data sets that often contain copyrighted material. There is therefore a risk that the generated content may infringe existing copyrights. For example, a generative AI model trained on a dataset of copyrighted images may generate new images that are very similar to the copyrighted images, which could infringe the rights of the original creators.

- Plagiarism: generative AI models can be trained to produce text, such as articles or stories. In some cases, these models can produce content that is similar or identical to existing works, leading to accusations of plagiarism. This becomes problematic when the generated text is used for commercial purposes or presented as an original work without proper attribution.

- Right of publicity: the use of generative AI to create deepfake videos or images can raise legal issues related to the right of publicity. Deepfakes involve superimposing a person’s face on another person’s body or altering their appearance in a realistic way. If these deepfakes are used for malicious purposes, to spread false information or to defame people, for example, this can lead to legal consequences.

Generative AI offers a wide range of potential applications for companies and private individuals, but unfortunately its promise of improved automation and autonomy appeals to criminals as well.

Criminals adapt very quickly to new technologies and integrate them into their methods, which poses a major challenge for law enforcement agencies and the judiciary. Intellectual property (IP) crime is no exception, as generative AI can provide criminals with a variety of tools to support their illegal business models.

| IMMENSE CHALLENGES AHEAD

AI has changed the way we live and work, but it has also brought with it new concerns about disinformation and manipulation, as well as a range of other potentially negative effects on the organisation and behaviour of human society.

The risks posed by these technological advances emphasise the need for responsible AI development and the introduction of robust safeguards to protect against potential misuse. As artificial intelligence continues to develop at a dizzying pace, the voices warning of the potential dangers are getting louder and louder.

Geoffrey Hinton, the British-Canadian computer scientist and cognitive psychologist who is often referred to as the “Godfather of AI” for his foundational work on machine learning and neural network algorithms, issued a stark warning in a sobering reflection: “These creations may one day surpass our intellect and dominate us, and it is up to us to urgently develop a strategy to thwart such an outcome.” In 2023, Hinton made the bold decision to leave his post at Google, fuelled by a burning desire to “shed light on the dark side of AI” while struggling with a sense of regret about the work that had defined his career.

Hinton’s premonitions reverberate through the halls of the scientific community and resonate with many of his colleagues.

Elon Musk, the visionary entrepreneur behind Tesla and SpaceX, stood shoulder to shoulder with an impressive coalition of over 1,000 tech luminaries when they released a highly publicised open letter in 2023. With unwavering conviction, they called for a pause in giant AI experiments and sounded the alarm on the potential of this powerful technology to pose profound dangers to society and the very nature of humanity.

From the increasing automation of certain professions, to the emergence of algorithms tainted by gender and racial bias, to the advent of autonomous weapons that function independently of human oversight, there are a multitude of concerns.

These fears are just the tip of the iceberg, as we have only begun to scratch the surface of AI’s true potential. The tech community has been discussing the potential dangers associated with artificial intelligence for some time now. Among the biggest concerns are the automation of workplaces, the spread of fake news and the dangerous arms race with AI-controlled weapons.

| THE DARK SIDE

Popular platforms like Google and Facebook may well understand their loyal users better than those users’ closest family and friends do. These technology giants amass colossal amounts of data for use in their artificial intelligence algorithms. A striking example of this is Facebook “likes”, which can be used to predict a variety of personal characteristics of Facebook users with considerable accuracy: from sexual orientation, ethnicity, religious and political beliefs to personality traits, intelligence, happiness, addictive behaviour, parental separation experiences and even demographic factors such as age and gender.

This revelation, uncovered through a comprehensive study, offers just a glimpse into the vast amounts of information that can be extracted from the web of search terms, online clicks, posts and reviews. The implications are staggering and paint a vivid picture of the depth and breadth of knowledge being amassed by proprietary AI algorithms.

This problem goes beyond large technology companies. Whenever AI algorithms play an important role in people’s activities, online or offline, there are risks. For example, while the use of AI in the workplace can increase a company’s productivity, it can also lead to lower quality jobs for employees. Furthermore, AI decisions can be biased and lead to discrimination in areas such as hiring, access to bank loans, healthcare, housing and more.

The use of AI to automate workplaces is a growing concern as it becomes more prevalent in industries such as marketing, manufacturing and healthcare. According to McKinsey, up to 30% of labour hours in the US economy could be automated by 2030, with Black and Hispanic workers particularly affected (Source: https://www.mckinsey.com/mgi/our-research/generative-ai-and-the-future-of-work-in-america). Goldman Sachs has even estimated that the equivalent of 300 million full-time jobs could be exposed to AI automation (Source: https://www.goldmansachs.com/intelligence/pages/generative-ai-could-raise-global-gdp-by-7-percent.html).

As AI robots become increasingly intelligent and powerful, fewer human workers will be needed to perform the same tasks. Although it is estimated that tens of millions of new jobs will be created by AI in the coming years, many workers may not have the necessary skills for these technical positions and could be left behind if companies do not provide training and development opportunities.

According to Chris Messina, the American technology strategist and inventor of the hashtag, medicine is already among the fields most affected by AI. Messina predicts that law and accounting will be next, with the legal sector facing a major upheaval. “Think about the complexity of contracts, and really diving in and understanding what it takes to create a perfect deal structure,” he said with regard to the legal field. “It’s a lot of attorneys reading through a lot of information – hundreds or thousands of pages of data and documents. It’s really easy to miss things. So AI that has the ability to comb through and comprehensively deliver the best possible contract for the outcome you’re trying to achieve is probably going to replace a lot of corporate attorneys”.

| OPACITY KEY TO SUCCESS?

AI and deep learning models can be difficult to understand, even for people who use the technology regularly. This leads to confusion about how AI arrives at its decisions and what information it uses. It also makes it difficult to understand why AI sometimes makes unfair or risky decisions.

One potential danger of AI is its ability to manipulate human behaviour, which has not yet been sufficiently investigated. Manipulative marketing tactics have been around for some time, but the combination of AI algorithms and the vast amount of data collected has greatly enhanced the ability of companies to influence users towards more profitable decisions. Digital businesses can now shape the context and timing of their offers and target individuals with manipulative strategies that are more effective and harder to detect.

A striking example is provided by the American retailer Target, which has used AI and data analytics to predict whether women are pregnant in order to send them discreet adverts for baby products (Source: https://www.driveresearch.com/market-research-company-blog/how-target-used-data-analytics-to-predict-pregnancies). Uber users have reported that they have to pay more for rides when their smartphone battery is low, even though battery level is not officially a factor in Uber’s pricing model. Large technology companies have often been accused of manipulating search results to their own advantage. One well-known example is the European Commission’s decision against Google’s shopping service in 2017, when the company was fined €2.42 billion for abusing its dominance as a search engine. Facebook was also fined a record amount by the US Federal Trade Commission for violating the privacy rights of its users, which in effect lowered the quality of its service.

| SOCIAL MANIPULATION THROUGH ALGORITHMS

The fear of social manipulation has become a reality as more and more politicians use social media platforms to spread their messages. TikTok is just one example of a social media platform that uses AI algorithms to display content to users based on their previous usage behaviour. However, the app has been criticised for its algorithm’s inability to filter out harmful and inaccurate content, leading to concerns about TikTok’s ability to protect its users from misinformation.

The situation has become even more complicated with the advent of AI-generated images, videos and voice changers, as well as deepfakes in the political and social sphere. With these technologies, it is easy to create realistic photos, videos and audio clips or replace the image of a person in an existing image or video with a different one. As a result, malicious actors now have another way to spread misinformation and propaganda, which can make it almost impossible to distinguish between reliable and fake news.

| SOCIAL SURVEILLANCE WITH AI TECHNOLOGY

In addition to the potential existential threat, many are also concerned about the negative impact of AI on privacy and security. For example, China is actively using facial recognition technology in various places such as offices and schools, which not only tracks a person’s movements but could also allow the government to monitor their activities, relationships and political views.

In the US, police departments use predictive policing algorithms to predict where offences will be committed. However, these algorithms are influenced by arrest rates that disproportionately affect black people, leading to excessive policing and raising the question of whether democracies can prevent AI from becoming an authoritarian tool. Many authoritarian regimes are already using AI, and others will certainly also use it, but the question is: to what extent will it infiltrate Western democracies and what restrictions will be imposed on it?

The lack of data protection when using AI tools is also a major problem. If you have interacted with an AI chatbot or used an AI face filter on the internet, for example, your data is being collected – but do you know where it is being sent and how it is being used? AI systems often collect personal data to customise the user experience or improve the AI models used, especially if the AI tool is free. Collecting this data is an integral part of how such tools operate.

| WEAKENING OF ETHICS AND GOODWILL

Now, religious leaders are also expressing concern about the potential dangers of AI. At a meeting in the Vatican in 2023 and in his message for the 2024 World Day of Peace, Pope Francis called on countries to conclude and implement a legally binding international agreement to regulate the development and use of AI.

Pope Francis warned of the possibility that AI can be manipulated and “generate statements that appear credible at first glance but are unfounded or reveal prejudices.” He emphasised how this could contribute to disinformation campaigns, mistrust in the media, election interference and more, ultimately increasing the likelihood of “fuelling conflict and hindering peace.”

The rapid emergence of generative AI tools underpins these fears, as numerous individuals use this technology to avoid writing assignments, jeopardising academic honesty and originality. In addition, biased AI could be used to assess a person’s suitability for employment, a mortgage, welfare or political asylum, which could lead to unfair treatment and discrimination, Pope Francis said.

“The distinctive human capacity for moral judgement and ethical decision-making is more than a complex set of algorithms”, he explained. “And this capacity cannot be simplified by programming a machine.”

| AI-POWERED AUTONOMOUS WEAPONS

Unfortunately, technology has often been used for warfare, and the advent of AI is no exception. In 2016, over 30,000 people, including AI and robotics researchers, expressed their concerns about investing in AI-powered autonomous weapons in an open letter which emphasised the critical choice facing humanity: to prevent or initiate a global AI-driven arms race. It warned that an arms race would be likely if a major military power were to push ahead with the development of AI weapons, with autonomous weapons being the weapon of choice in the future.

This prediction has come true in the form of lethal autonomous weapon systems (LAWS) that can identify and attack targets autonomously with minimal regulatory oversight. The proliferation of advanced weapons has raised fears among powerful nations and led to a technological cold war.

While these new weapons pose a significant risk to civilians, the danger escalates when autonomous weapons fall into the wrong hands. Given the capabilities of hackers in carrying out cyber attacks, it is not difficult to imagine that malicious actors could infiltrate autonomous weapon systems and cause catastrophic consequences.

Uncontrolled political rivalries and aggressive tendencies could lead to the malicious use of artificial intelligence. Some people fear that the pursuit of profit will continue to drive research into the possibilities of AI, despite the warnings of influential figures. After all, the mentality of pushing the boundaries and monetising technology is not unique to the AI sector, but a recurring pattern throughout history.

| SELF-AWARE AI AND LOSS OF HUMAN INFLUENCE

Excessive reliance on AI technology risks diminishing human influence and compromising certain aspects of society. For example, the use of AI in healthcare can lead to a decline in human empathy and critical thinking. Similarly, the use of generative AI for creative activities could undermine human creativity and emotional expression. Excessive interaction with AI systems may even lead to a decline in peer communication and social skills. While AI can undoubtedly help with the automation of routine tasks, there are concerns about its potential to compromise human intelligence, skills and the importance of community.

There are growing fears that AI will advance to the point where it becomes sentient, beyond human control and potentially malicious in its behaviour. Reports of alleged cases of AI sentience have already surfaced, including the well-known account of a former Google engineer, Blake Lemoine, who in 2022 claimed to have had conversations with the AI chatbot LaMDA, which he perceived as sentient and as conversing like a human child. As AI progresses towards artificial general intelligence and eventually artificial superintelligence, calls are growing louder to stop these developments altogether.

| DEVELOPING LEGAL REGULATIONS

If AI systems are developed by private companies with the main aim of making a profit, there is a risk that these systems will manipulate user behaviour to the company’s advantage instead of putting the user’s preferences first. To protect the autonomy and self-determination of humans in the interaction between AI and humans, it is crucial to establish guidelines that prevent AI from subordinating, deceiving or manipulating humans. In 2019, a high-level expert group on AI presented the European Commission’s Ethics Guidelines for Trustworthy AI. According to the Guidelines, AI should respect all applicable laws and regulations, respect ethical principles and values and be robust from a technical perspective.

To ensure human autonomy in AI interactions, the first crucial step is to improve transparency regarding the functions and capabilities of AI. Users should have a clear understanding of how AI systems work and how their information, especially sensitive personal data, is used by AI algorithms. Although the European Union’s General Data Protection Regulation (GDPR) aimed to increase transparency through the right to explanation, it has not been entirely successful.

It is often claimed that AI systems are like a “black box”, which makes transparency difficult. However, this is not entirely true when it comes to manipulation. The creators of AI systems can introduce certain restrictions to prevent manipulative behaviour, so it is more a question of design and the objective function. Algorithmic manipulations should in principle be explainable by the designers who developed the algorithm and monitor its performance.

According to experts in the field, three important steps should be taken to ensure that the transparency requirement is met by all providers of AI systems:

- Introduce human oversight: the European Union’s draft Artificial Intelligence Act (AIA) proposes that providers of AI systems should establish a human oversight mechanism to monitor the performance and output of the system.

- Establish an accountability framework: human oversight should include a robust accountability framework to incentivise providers to comply.

- Provide clear information: transparency should not be provided through complex messaging that confuses users. Instead, there should be two layers of information about AI systems: a concise, accurate and easy-to-understand summary for users and a more detailed version available to consumer protection authorities upon request.

There is no doubt that artificial intelligence has become a transformative force that is revolutionising industries and reshaping the way we live, work and interact. Its potential to increase efficiency, drive innovation and solve complex problems offers immense benefits – from improving healthcare and education to tackling climate change and beyond. However, the advancing development of AI also raises important ethical, social and economic questions, and we must be aware of its potential dangers. These include concerns about privacy, job displacement and the risk of AI systems being manipulated for profit, malicious intent or even the promotion of pro-war policies.

To navigate this complex landscape, it is essential to create a solid ethical framework, promote transparency and foster collaboration between governments, businesses and society at large. In this way, we can harness the power of AI to create a more prosperous, equitable and sustainable future for all.